As an undergrad, I was on the Penn Formula SAE team (site not up to date), where I worked on the electronics and eventually became the subteam lead for the 2011 season. Our team consisted of Ryan Kumbang, Karan Desai, Nisan Lerea, and me (plus tons of help from alums and from Keith and Praveer). Historically, our team has had one of the most custom electronics systems (self-made CAN, boards, etc.). I was in charge of making sure our shifting (pneumatics), clutch (linear actuator), ECU, etc. operated smoothly.

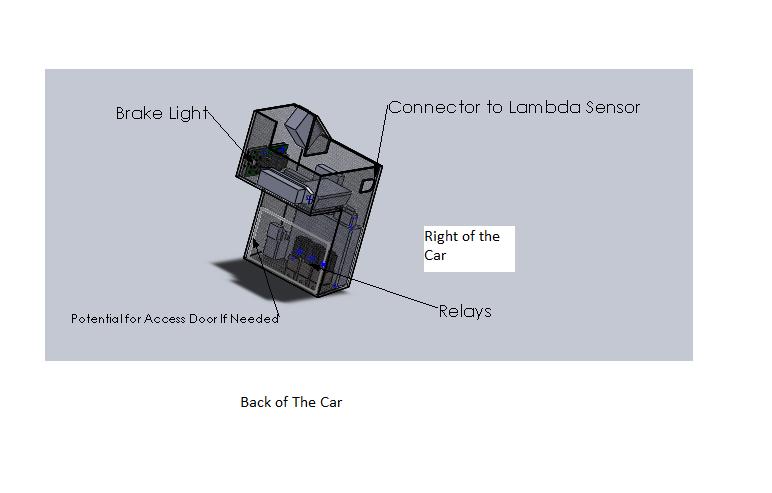

To save weight and space, we condensed all the major electronic components except the motors, pistons, and steering wheel into a small box on the back of the car.

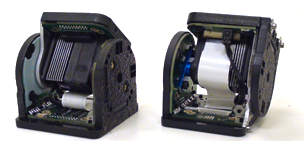

Most of our electronics system is based on the same CAN-based technology used in Modlab on the CKbots, which makes it modular. The boards were designed in Eagle (PCB CAD software) and sent to 4PCB for fabrication. The overall system relies on "brain" boards and "utility" boards. A "brain" board, as shown below, consists of a PIC microcontroller for logic and a CAN connector for bus communication. Each brain board is paired with a secondary board underneath that gives it a specific capability, such as clutch, steering wheel, shifting, or overall system logic for the car. In total, 8 boards were used on the car, and they were simple to swap out in case of failure or when additional features were needed.
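As a rough illustration of how a board might put a request on the shared bus, here is a minimal Python sketch of packing and unpacking a classic CAN frame (an 11-bit identifier plus up to 8 data bytes). The identifier `SHIFT_REQUEST_ID` and the two-byte payload layout are hypothetical; the team's real message IDs and formats aren't documented here, and the actual boards ran PIC firmware rather than Python.

```python
import struct
from dataclasses import dataclass

# Hypothetical 11-bit identifier, for illustration only.
SHIFT_REQUEST_ID = 0x120


@dataclass
class CanFrame:
    arbitration_id: int  # standard CAN identifiers are 11 bits (0x000-0x7FF)
    data: bytes          # classic CAN carries 0-8 data bytes

    def __post_init__(self):
        if not 0 <= self.arbitration_id <= 0x7FF:
            raise ValueError("standard CAN ID must fit in 11 bits")
        if len(self.data) > 8:
            raise ValueError("classic CAN payload is at most 8 bytes")


def encode_shift_request(direction: int, source_board: int) -> CanFrame:
    """Pack an upshift (+1) or downshift (-1) request from a given board number."""
    if direction not in (-1, 1):
        raise ValueError("direction must be +1 (up) or -1 (down)")
    # '<bB': signed byte for the direction, unsigned byte for the sender's board number
    return CanFrame(SHIFT_REQUEST_ID, struct.pack("<bB", direction, source_board))


def decode_shift_request(frame: CanFrame) -> tuple[int, int]:
    """Unpack (direction, source_board) from a shift-request frame."""
    if frame.arbitration_id != SHIFT_REQUEST_ID:
        raise ValueError("not a shift request frame")
    direction, source_board = struct.unpack("<bB", frame.data)
    return direction, source_board
```

On a real CAN bus, lower identifiers win arbitration, so time-critical messages such as shift or clutch commands would typically be assigned the smallest IDs.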

Some test footage of our car:

I worked in Modlab for several years developing software for the CKbots, modular robots that can be assembled into arbitrary configurations (2 examples below from the Modlab site). Our software was an API that let developers create applications and behaviours for the CKbots. CKbots could be controlled either individually or in "clusters": for instance, one could designate a cluster of 3 modules as an "arm" and have the arm follow a predefined motion rather than controlling each module's motion individually.
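The cluster idea can be sketched in a few lines of Python. This is not the Modlab API: the `Module` and `Cluster` classes are hypothetical, sending commands over the CAN bus is replaced by simply recording the commanded angle, and a "predefined motion" is simplified to a list of keyframe poses with no timing.

```python
class Module:
    """Hypothetical stand-in for a single CKbot module with one servo joint."""

    def __init__(self, module_id: int):
        self.module_id = module_id
        self.angle = 0.0  # last commanded joint angle, in degrees

    def set_angle(self, degrees: float) -> None:
        # A real module would receive this over the CAN bus; here we only record it.
        self.angle = degrees


class Cluster:
    """Groups modules under a name so they can be driven as one unit."""

    def __init__(self, name: str, modules: list[Module]):
        self.name = name
        self.modules = modules

    def apply_pose(self, pose: list[float]) -> None:
        """Command every module in the cluster at once, one angle per module."""
        if len(pose) != len(self.modules):
            raise ValueError("pose needs one angle per module")
        for module, angle in zip(self.modules, pose):
            module.set_angle(angle)

    def play(self, motion: list[list[float]]) -> None:
        """Step through a predefined sequence of poses (keyframes) in order."""
        for pose in motion:
            self.apply_pose(pose)
```

For example, `Cluster("arm", [Module(i) for i in range(3)]).play([[0, 0, 0], [30, -15, 10], [45, -30, 20]])` leaves the three modules at 45, -30, and 20 degrees, without the caller ever addressing a module directly.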

I worked on earlier versions of the CKbots, which communicated over a CAN bus but required manual screw connections. Other versions used magnetic faces to connect and disconnect automatically, but I did not work with those.

I implemented a series of high- and low-level software interfaces in Linux for the CKbots, primarily in Python and C (for the embedded portions), and worked closely with Dr. Shai Revzen and Jimmy Sastra. These interfaces enabled specifying gaits, combining gaits, monitoring the CAN bus, etc. For more general information about the CKbots, see modlabupenn.org/ckbot.
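One common way to express a gait for a chain of modules is as a function from time to joint angles, for example a sinusoidal traveling wave, so that combining two gaits becomes a weighted blend of two such functions. The sketch below assumes that representation; `sine_gait` and `blend` are hypothetical names, and this is not necessarily how the CKbot software represented gaits.

```python
import math
from typing import Callable, List

# A gait maps time (seconds) to one joint angle (degrees) per module.
Gait = Callable[[float], List[float]]


def sine_gait(n_modules: int, amplitude: float, period: float, phase_step: float) -> Gait:
    """Traveling-wave gait: module i lags module 0 by i * phase_step radians."""
    def gait(t: float) -> List[float]:
        return [amplitude * math.sin(2 * math.pi * t / period - i * phase_step)
                for i in range(n_modules)]
    return gait


def blend(g1: Gait, g2: Gait, w: float) -> Gait:
    """Combine two gaits as a weighted sum: (1 - w) * g1 + w * g2."""
    def gait(t: float) -> List[float]:
        return [(1 - w) * a + w * b for a, b in zip(g1(t), g2(t))]
    return gait
```

Because gaits are plain functions, blending can be nested or its weight changed over time to transition smoothly from one gait to another.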

Communication between modules was based on the Robotics Bus Protocol, detailed here.

In undergrad, I was also a member of the UPennalizers RoboCup Standard Platform League (SPL) team. The SPL is an international competition in which ~24 teams play robotic humanoid soccer using a standard platform, the Nao robot.

Here I worked primarily on the vision system for the Nao robots, making sure they could see the (correct) ball, localize, etc. I implemented features such as multi-line detection using Hough transforms and horizon detection using odometry. I was the vision lead for the 2010 competition in Singapore, where we made it to the quarterfinals (and beat many of the other top teams!). See a sample goal from the competition below.
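A minimal sketch of Hough-transform line detection in plain Python: each edge point votes for every line (theta, rho) it could lie on, using `x*cos(theta) + y*sin(theta) = rho`, and the strongest well-separated peaks in the vote table are reported as lines. The function name, bin sizes, and the simple suppression window are illustrative assumptions, not the UPennalizers implementation.

```python
import math
from collections import Counter
from typing import Iterable, List, Tuple


def hough_lines(points: Iterable[Tuple[int, int]], n_theta: int = 180, top_k: int = 2,
                min_votes: int = 3, theta_window: int = 10,
                rho_window: int = 5) -> List[Tuple[float, int, int]]:
    """Return up to top_k lines as (theta_degrees, rho, votes), theta in [0, 180)."""
    acc = Counter()
    for x, y in points:
        for ti in range(n_theta):
            theta = math.pi * ti / n_theta
            rho = round(x * math.cos(theta) + y * math.sin(theta))
            acc[(ti, rho)] += 1  # this point votes for line (theta, rho)

    def near(a: Tuple[int, int], b: Tuple[int, int]) -> bool:
        # (theta, rho) and (theta + 180 deg, -rho) are the same line, so check the wrap too.
        dt = abs(a[0] - b[0])
        direct = dt <= theta_window and abs(a[1] - b[1]) <= rho_window
        wrapped = n_theta - dt <= theta_window and abs(a[1] + b[1]) <= rho_window
        return direct or wrapped

    lines, accepted = [], []
    for cell, votes in acc.most_common():  # strongest cells first
        if votes < min_votes or len(lines) == top_k:
            break
        if any(near(cell, other) for other in accepted):
            continue  # crude non-maximum suppression: skip cells near an accepted peak
        accepted.append(cell)
        lines.append((180.0 * cell[0] / n_theta, cell[1], votes))
    return lines
```

The suppression step is what makes this "multi-line": without it, neighboring cells of the single strongest line would fill all `top_k` slots.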

For more information about the team and competition, visit our site here.

For more information about our software system read our report.

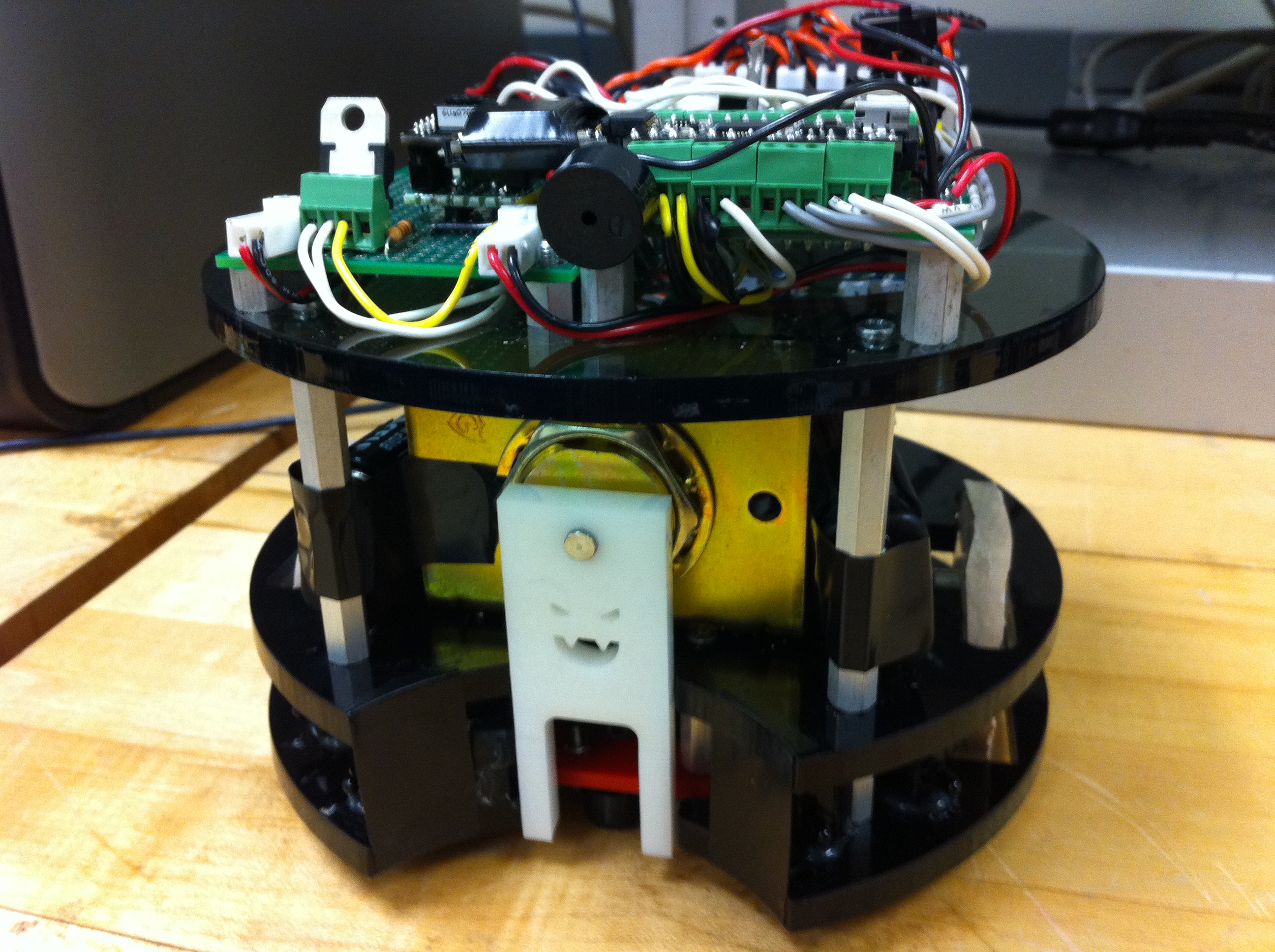

For my final project in my mechatronics class, I worked with Michael Posner and Sydney Jackopin to build 3 hockey-playing robots. For more competition details, check out www.robockey.com. We made it to the quarterfinals. I was primarily responsible for the entire electrical portion, but also helped with parts of the mechanical and software portions. Here's a compilation of our matches:

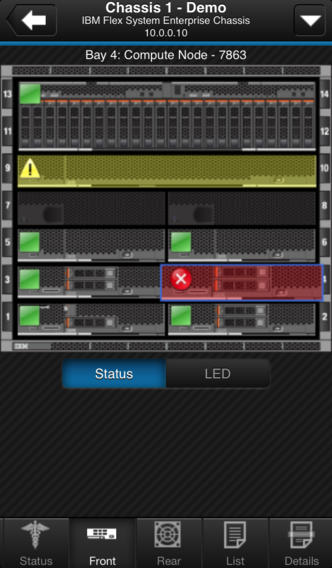

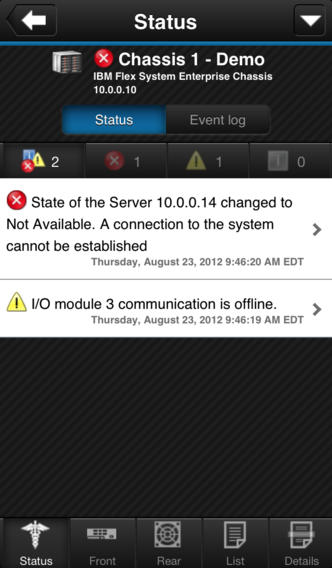

I spent ~1.25 years in IBM's Systems and Technology Group as a software engineer, developing mobile applications for server management, specifically for the Flex Systems offering. Using Dojo and Cordova, we built a JavaScript-based "native" application that ran on iOS, Android, and BlackBerry phones and tablets. The app allowed users to monitor and control their servers (turn them on/off or reboot them). The Flex group has since been sold to Lenovo, but here are some screenshots of the product.

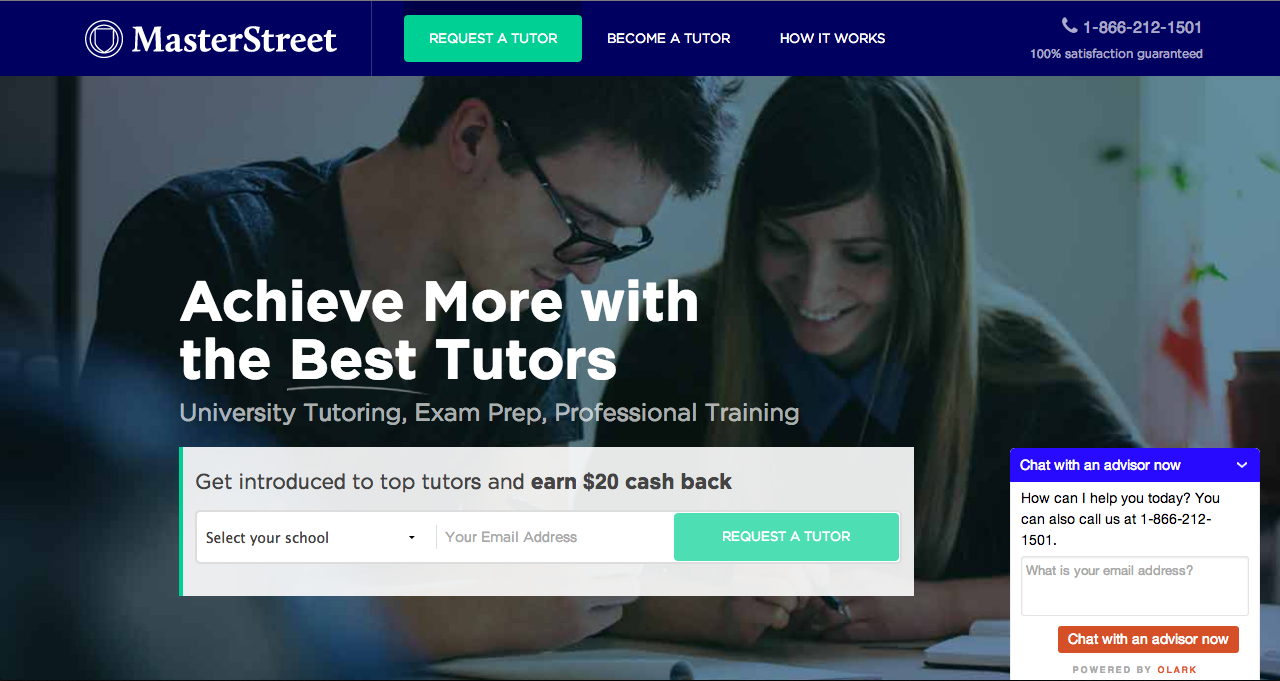

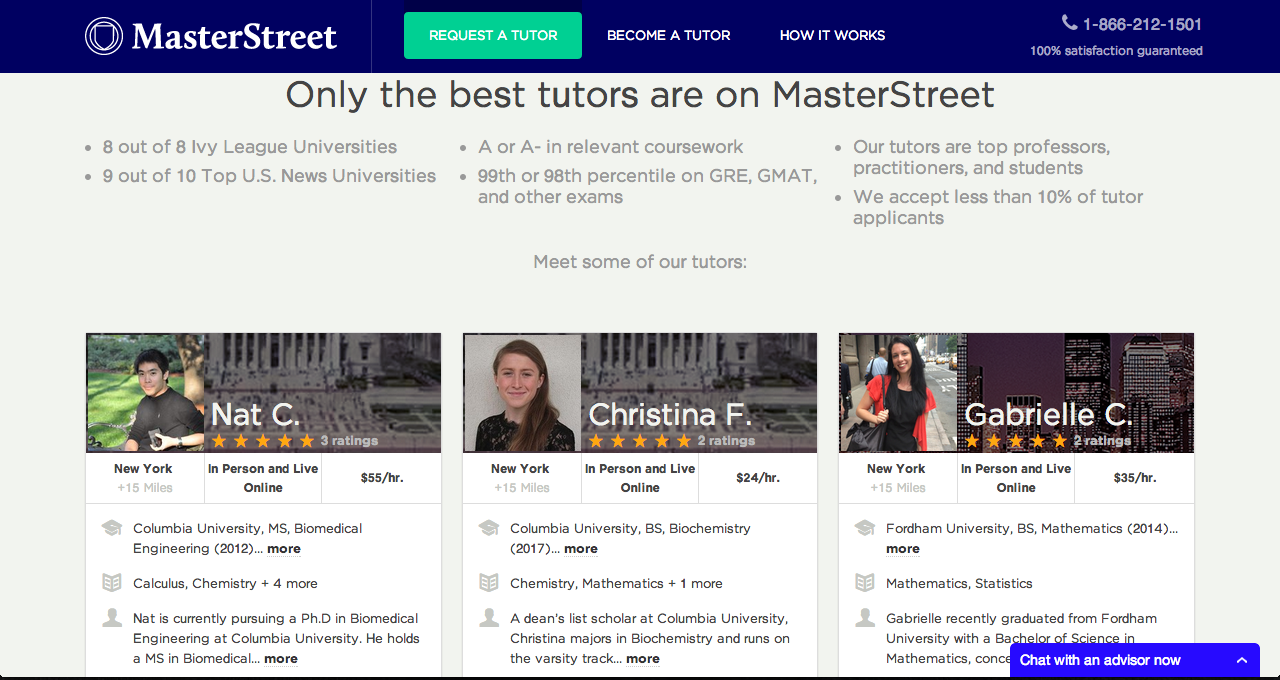

I spent a year in NYC working at a small startup that helped working professionals find continuing education opportunities. I worked on both front-end and back-end components using Ruby on Rails, AWS, Elasticsearch, AngularJS, etc. Below are some screenshots of the tutors' version of our product (there were many previous iterations as well).

While in high school, I joined the Intelligence in Action Lab at the University of Colorado, Boulder (CU) for 2 summers, supervised by Prof. Gregory Grudic. During the first summer, I worked on optimizing and speeding up vision code for the DARPA LAGR robot (pictured above on the left). The goal of the DARPA LAGR competition was to advance robot perception and navigation in large outdoor environments. All teams were provided with the same LAGR vehicle, whose primary sensors were 2 fixed stereo cameras, a GPS, an accelerometer, and encoders.

During my second summer in the group, I built a smaller, cheaper platform for future lab research (pictured above on the right). The base platform was purchased from superdroidrobots.com; I then added wheel encoders, motor drivers, a compass, and a GPS unit, as well as a BrainStem microcontroller. I also implemented an API that let higher-level code control the robot's motion and retrieve sensor information.
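A stripped-down sketch of what such an interface could look like in Python: it clamps motor commands and integrates wheel-encoder ticks into a pose estimate. The class and method names, the normalized speed range, and the differential-drive odometry model are assumptions for illustration (the real base's drive geometry isn't described), and the link to the BrainStem board is abstracted away entirely.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    x: float = 0.0        # meters
    y: float = 0.0        # meters
    heading: float = 0.0  # radians, counterclockwise from the starting direction


class RobotAPI:
    """Hypothetical high-level interface; the microcontroller link is not modeled."""

    def __init__(self, ticks_per_rev: int, wheel_radius: float, track_width: float):
        self.ticks_per_rev = ticks_per_rev
        self.wheel_radius = wheel_radius  # meters
        self.track_width = track_width    # distance between left and right wheels, meters
        self.pose = Pose()
        self.left_speed = 0.0
        self.right_speed = 0.0

    def set_wheel_speeds(self, left: float, right: float) -> None:
        # Clamp to a normalized [-1, 1] range; this sketch only stores the commands
        # instead of forwarding them to the motor drivers.
        self.left_speed = max(-1.0, min(1.0, left))
        self.right_speed = max(-1.0, min(1.0, right))

    def update_odometry(self, left_ticks: int, right_ticks: int) -> Pose:
        """Integrate encoder tick deltas using an (assumed) differential-drive model."""
        per_tick = 2 * math.pi * self.wheel_radius / self.ticks_per_rev
        d_left = left_ticks * per_tick
        d_right = right_ticks * per_tick
        d_center = (d_left + d_right) / 2
        d_heading = (d_right - d_left) / self.track_width
        mid_heading = self.pose.heading + d_heading / 2  # midpoint approximation
        self.pose.x += d_center * math.cos(mid_heading)
        self.pose.y += d_center * math.sin(mid_heading)
        self.pose.heading += d_heading
        return self.pose
```

Higher-level code then only deals with speeds and poses; compass or GPS readings could later be fused in to correct the drift that pure encoder odometry accumulates.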

For more information on the DARPA LAGR competition please see the wiki page.